
February 23, 2021


Data-driven enterprises are transforming their businesses by migrating their on-prem data lakes and data warehouses to the cloud so they can enable new analytics at scale. As every enterprise looks to migrate, it’s important that IT leaders don’t forget about a key data stakeholder: the data scientist. Open source software (OSS) and libraries are a crucial piece of a data scientist’s toolkit, and we’ve made significant progress in making OSS easier to manage for data scientists on Google Cloud’s data analytics platform.

“Data scientists rely on a suite of powerful open source applications to work on solving the world’s biggest challenges. With the integration of leading open source tools like Dask and RAPIDS on Google Cloud, the Dataproc team is making NVIDIA GPU-accelerated data science at scale more accessible.”

Scott McClellan, Sr Director, Data Science Product Group, NVIDIA

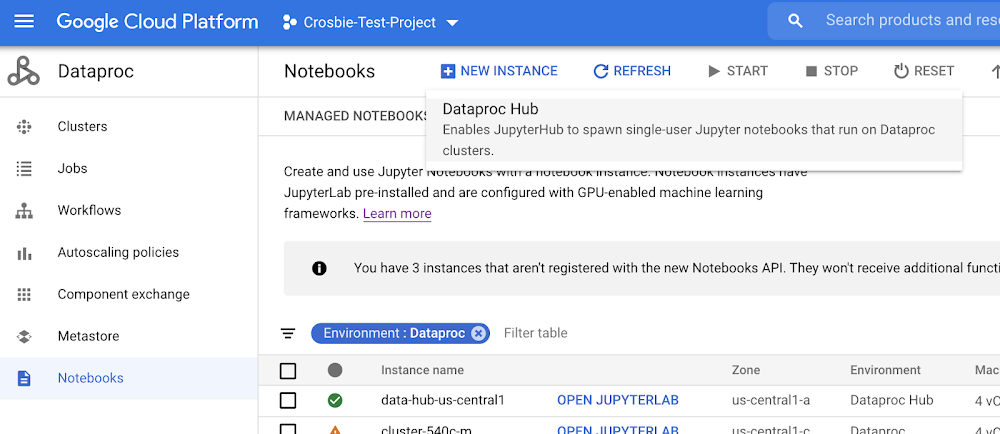

Dataproc Hub feature is now generally available: Secure and scale open source machine learning

Dataproc Hub, a feature now generally available for Dataproc users, provides an easier way to scale processing for common data science libraries and notebooks, govern custom open source clusters, and manage costs so that enterprises can maximize their existing skills and software investments. Dataproc Hub features include:

- Ready-to-use big data frameworks including JupyterLab with BigQuery, Presto, PySpark, SparkR, Dask, and TensorFlow on Spark.

- Access to custom Dataproc clusters within an isolated and controlled data science sandbox. Data scientists do not have to rely on IT to make changes to the programming environment.

- Access to BigQuery, Cloud Storage and AI Platform using the notebook users’ credentials ensures that permissions are always in sync and the right data is available to the right users.

- IT cost controls that include the ability to set autoscaling policies, CPU/RAM sizes and NVIDIA GPUs, auto-deletions and timeouts, and more.

- Integrated security controls including custom image versions, locations, VPC-SC, AxT, CMEK, sole tenancy, Shielded VMs, Apache Ranger, and Personal Cluster Authentication, to name a few.

- Easy-to-generate templated Dataproc configurations, based on existing Dataproc clusters, that can be reused for other clusters. A simple export is all that is needed.

The current state of open source machine learning on Google Cloud

Dataproc Hub was created in partnership with several companies that were facing rapid adoption of cloud-sized datasets (big data), machine learning, and IoT. These new and large datasets were coupled with data analysis techniques and tools that simply do not fit into the traditional data warehousing model. Data science teams were combining methodologies across ETL (creating their own data structures), administration (using programming skills to configure resource sizing), and reporting (using Jupyter notebooks for exchanging data results). In addition, data scientists often work with unstructured data, which does not follow the same table/view permissions model as the data warehouse.

The IT leaders we worked with wanted an easy way to control and secure data science environments. They also wanted to maintain production stability, control costs, and ensure security and governance controls were being met. They asked us to simplify the process of creating a secured data science environment that could serve as an extension of their BigQuery data warehouse. At the same time, the data scientists who were setting up their own data science environments felt frustrated by having to do what they considered “IT work”, such as figuring out various security connections and package installations. They wanted to focus on exploring data and building models with the tools they were familiar with.

Working with these organizations, we built Dataproc Hub to eliminate these primary concerns of both IT leaders and data science teams.

IT-governed Dataproc clusters personalized to your data scientists’ use cases

With Dataproc Hub, you can extend existing data warehouse investments at a cost that grows in proportion to the value, without having to compromise on security and compliance standards. Dataproc Hub allows IT leaders to specify templated Dataproc clusters that can leverage a variety of controls. These range from custom images, which can be used to include standard IT software such as virus protection and asset management software, to autoscaling policies that let customers automatically scale their code within limits set in advance. Dataproc templates can easily be created from a running Dataproc cluster using the export command.
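As a rough illustration of the export step, the sketch below assembles a `gcloud dataproc clusters export` invocation in Python. The helper name `build_export_command`, the cluster name, the region, and the output file are all hypothetical placeholders, and actually running the command assumes an installed and authenticated gcloud CLI.

```python
import shlex


def build_export_command(cluster: str, region: str, destination: str) -> list[str]:
    """Return the argv that exports a running cluster's configuration to a YAML file."""
    return [
        "gcloud", "dataproc", "clusters", "export", cluster,
        f"--region={region}",
        f"--destination={destination}",
    ]


if __name__ == "__main__":
    # Hypothetical names, used only for illustration.
    cmd = build_export_command("example-cluster", "us-central1", "example-cluster.yaml")
    print(shlex.join(cmd))
    # To actually run the export (requires an authenticated gcloud CLI):
    # import subprocess
    # subprocess.run(cmd, check=True)
```

Building the argument list separately from running it keeps the sketch easy to inspect before anything touches a real project.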

Customers of AI Platform Notebooks who want to use their BigQuery or Cloud Storage data for model training, feature engineering, and preprocessing will often exceed the limits of a single-node machine. Data scientists also want to quickly iterate on ideas from inside the notebook environment without having to spend time packaging up their models to send off to a separate service just to try out an idea. With Dataproc Hub, data scientists can quickly tap into APIs like PySpark and Dask that are configured to autoscale to meet the demands of the data without having to do a lot of setup and configuration. They can even accelerate their Spark XGBoost pipelines with NVIDIA GPUs to process their data 44x faster at a 14x reduction in cost vs CPUs. The data scientist is in full control of the software environment spawned by Dataproc Hub and can install their own packages, libraries, and configurations, achieving freedom within the framework set by IT.
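To see how the 44x speedup and the 14x cost reduction relate, here is a small back-of-the-envelope calculation. It assumes, purely for illustration, that total cost is hourly price multiplied by runtime on both the CPU and GPU clusters; the function name is hypothetical and the result is an implied ratio, not a published price.

```python
def implied_hourly_price_ratio(speedup: float, cost_reduction: float) -> float:
    """Ratio of GPU-cluster to CPU-cluster hourly price implied by the two figures.

    Assumes total cost = hourly price * runtime for both clusters.
    """
    # runtime_gpu = runtime_cpu / speedup
    # cost_gpu    = cost_cpu / cost_reduction
    # price_gpu / price_cpu = (cost_gpu / runtime_gpu) / (cost_cpu / runtime_cpu)
    #                       = speedup / cost_reduction
    return speedup / cost_reduction


ratio = implied_hourly_price_ratio(speedup=44, cost_reduction=14)
print(f"{ratio:.2f}")  # 3.14
```

Under that assumption, the GPU cluster could cost roughly three times as much per hour as the CPU cluster and still finish the job at one fourteenth of the total cost, because it runs for far less time.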

Using Dataproc Hub and Python-based libraries for genomic analysis

One example of this need to balance IT guardrails with data science flexibility is in the field of genomics, where data volumes continue to explode. By 2025, an estimated 40 exabytes of storage capacity will be required for human genomic data. Researchers need the freedom to try out a variety of techniques and run large-scale jobs without IT intervention. However, IT organizations need to protect the personal health data that comes with genomics datasets, something that Google Cloud, Dataproc, and the open source community are well suited to help with.

If you want to see the genomic analysis we talked about above in action, please register for our upcoming webinar where we will demo Dataproc Hub.

Next steps

The Dataproc Hub feature is now generally available and ready for use today. To get started, log into the Google Cloud Console and from the Dataproc page, choose Notebooks and then “New Instance”.

Name the instance and populate the Dataproc Hub fields to configure the settings according to your standards. Alternatively, you can accept the default settings to be provided with a Dataproc Hub environment based on two example clusters.

The IP address of the Dataproc Hub can then be provided to data scientists so teams can self-provision Jupyter environments based on Dataproc clusters. When the data task is completed, the user can go to File > Return to Control Panel and then “Stop Cluster”. Cluster templates can also be set with a time to live (TTL) to ensure that resources are cleaned up.
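As a minimal sketch of the TTL idea, the helper below adds idle and maximum-age deletion timers to a cluster definition held as a Python dictionary. The field names `lifecycleConfig`, `idleDeleteTtl`, and `autoDeleteTtl` follow the Dataproc cluster configuration schema; the helper name `with_ttl`, the example values, and the assumption that your template keeps these settings under a top-level `config` key are illustrative only.

```python
import copy


def with_ttl(cluster: dict, idle_seconds: int, max_age_seconds: int) -> dict:
    """Return a copy of a cluster definition with idle and maximum-age TTLs set."""
    updated = copy.deepcopy(cluster)  # leave the caller's template untouched
    lifecycle = updated.setdefault("config", {}).setdefault("lifecycleConfig", {})
    # Durations are written as a number of seconds followed by "s".
    lifecycle["idleDeleteTtl"] = f"{idle_seconds}s"    # delete after this long idle
    lifecycle["autoDeleteTtl"] = f"{max_age_seconds}s"  # delete after this total age
    return updated


# Hypothetical template fragment, used only for illustration.
template = {"config": {"softwareConfig": {"optionalComponents": ["JUPYTER"]}}}
print(with_ttl(template, idle_seconds=1800, max_age_seconds=28800))
```

Returning a modified copy rather than editing in place makes it easy to derive several templates with different timeouts from one exported baseline.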