The life of a data scientist can be challenging. If you’re in this role, your job may involve anything from understanding the day-to-day business behind the data to keeping up with the latest machine learning academic research. With all that a data scientist must do to be effective, you shouldn’t have to worry about migrating data environments or dealing with processing limitations associated with working with raw data.

Google Cloud’s Dataproc lets you run cloud-native Apache Spark and Hadoop clusters easily. This is especially helpful as growing data volumes push data scientists and machine learning researchers off personal servers and laptops and into distributed cluster environments like Apache Spark, which offers Python and R interfaces for data of any size. You can run open source data processing on Google Cloud, making Dataproc one of the fastest ways to extend your existing data analysis to cloud-sized datasets.

We’re announcing the general availability of several new Dataproc features that will let you apply the open source tools, algorithms, and programming languages that you use today to large datasets. This can be done without having to manage clusters and computers. These new GA features make it possible for data scientists and analysts to build production systems based on personalized development environments.

You can keep data as the focal point of your work, instead of getting bogged down with peripheral IT infrastructure challenges.

Here’s more detail on each of these features.

Streamlined environments with autoscaling and notebooks

With Dataproc autoscaling and notebooks, data scientists can work in familiar notebook environments that remove the need to change underlying resources or contend with other analysts for cluster processing. You can do this by combining Dataproc’s component gateway for notebooks with autoscaling, which is now GA.

With Dataproc autoscaling, a data scientist can work on their own isolated and personalized small cluster while running descriptive statistics, building features, developing custom packages, and testing various models. Once you’re ready to run your analysis on the full dataset, you can do the full analysis within the same cluster and notebook environment, as long as autoscaling is enabled. The cluster will simply grow to the size needed to process the full dataset and then scale itself back down when the processing is completed. You don’t need to waste time trying to move over to a larger server environment or figure out how to migrate your work.
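To make the grow-and-shrink behavior more concrete, here is a minimal sketch of what an autoscaling policy definition might look like, built as a plain Python dictionary. The field names follow the Dataproc AutoscalingPolicy resource as we understand it, and the helper name `build_autoscaling_policy`, the policy ID, and every numeric value are hypothetical placeholders, so check them against the current API reference before relying on them.

```python
import json


def build_autoscaling_policy(policy_id, primary_workers=2, max_secondary_workers=20):
    """Return a dict shaped like a Dataproc AutoscalingPolicy resource.

    Hypothetical helper: all numbers are illustrative, not recommendations.
    """
    if primary_workers < 0 or max_secondary_workers < 0:
        raise ValueError("worker counts must be non-negative")
    return {
        "id": policy_id,
        # Primary workers stay fixed: min and max are the same value.
        "workerConfig": {
            "minInstances": primary_workers,
            "maxInstances": primary_workers,
        },
        # Secondary workers are the ones that scale with demand.
        "secondaryWorkerConfig": {
            "minInstances": 0,
            "maxInstances": max_secondary_workers,
        },
        "basicAlgorithm": {
            # How long to wait between scaling decisions (assumed value).
            "cooldownPeriod": "120s",
            "yarnConfig": {
                "scaleUpFactor": 0.5,
                "scaleDownFactor": 1.0,
                "gracefulDecommissionTimeout": "600s",
            },
        },
    }


policy = build_autoscaling_policy("dev-notebook-policy", max_secondary_workers=50)
print(json.dumps(policy, indent=2))
```

In this sketch the small development cluster is just the fixed primary workers, and the full-dataset run is what pulls in secondary workers up to the configured ceiling.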

Remember that when working with large datasets in a Jupyter notebook for Spark, you may often want to stop the Spark context that is created by default and instead use a configuration with larger memory limits, as shown in the example below.

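#import the classes needed to rebuild the context

from pyspark import SparkConf, SparkContext

#In Jupyter you have to stop the current context first

sc.stop()

#in this example, a maxResultSize of 0 lifts the cap on results collected to the driver, so they are bounded only by the driver's memory on the master

conf = SparkConf().set("spark.driver.maxResultSize", "0")

#restart the Spark context with your new configuration

sc = SparkContext(conf=conf)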

Autoscaling and notebooks make a great development environment for right-sizing cluster resources and working collaboratively. Once you are ready to move from development to an automated process for production jobs, the Dataproc Jobs API makes this an easy transition.

Logging and monitoring for SparkR job types

The Dataproc Jobs API makes it possible to submit a job to an existing Dataproc cluster with a jobs.submit call over HTTP, with the gcloud command-line tool, or in the Google Cloud Platform Console itself. With the GA release of the SparkR job type, you can have SparkR jobs logged and monitored, which makes it easy to build automated tooling around R code. The Jobs API also separates permission to submit jobs on a cluster from permission to reach the cluster itself. As a result, data scientists and analysts can schedule production jobs without setting up gateway nodes or networking configurations.
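As a rough illustration of the jobs.submit call over HTTP, the sketch below builds the URL and JSON body for a SparkR job without sending anything. The endpoint and the `sparkRJob` and `mainRFileUri` field names reflect the Dataproc v1 REST API as we understand it. The helper name `build_sparkr_submit_request` and the project, region, cluster, and bucket values are all hypothetical, and authentication is left out entirely.

```python
import json

DATAPROC_API = "https://dataproc.googleapis.com/v1"


def build_sparkr_submit_request(project_id, region, cluster_name, main_r_file_uri, args=None):
    """Return (url, body) for a jobs.submit call that runs a SparkR script.

    Hypothetical helper: it only assembles the request and does not send it.
    """
    url = f"{DATAPROC_API}/projects/{project_id}/regions/{region}/jobs:submit"
    body = {
        "job": {
            # The job runs on an existing cluster, identified by name.
            "placement": {"clusterName": cluster_name},
            "sparkRJob": {
                "mainRFileUri": main_r_file_uri,
                "args": list(args or []),
            },
        }
    }
    return url, body


# Placeholder values for illustration only.
url, body = build_sparkr_submit_request(
    "my-project", "us-central1", "analysis-cluster",
    "gs://my-bucket/scripts/retrain_model.R", args=["--date", "2019-06-01"],
)
print(url)
print(json.dumps(body, indent=2))
```

To actually submit the job, you would POST this body to the URL with an OAuth 2.0 access token for an identity that is allowed to submit jobs on the cluster.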

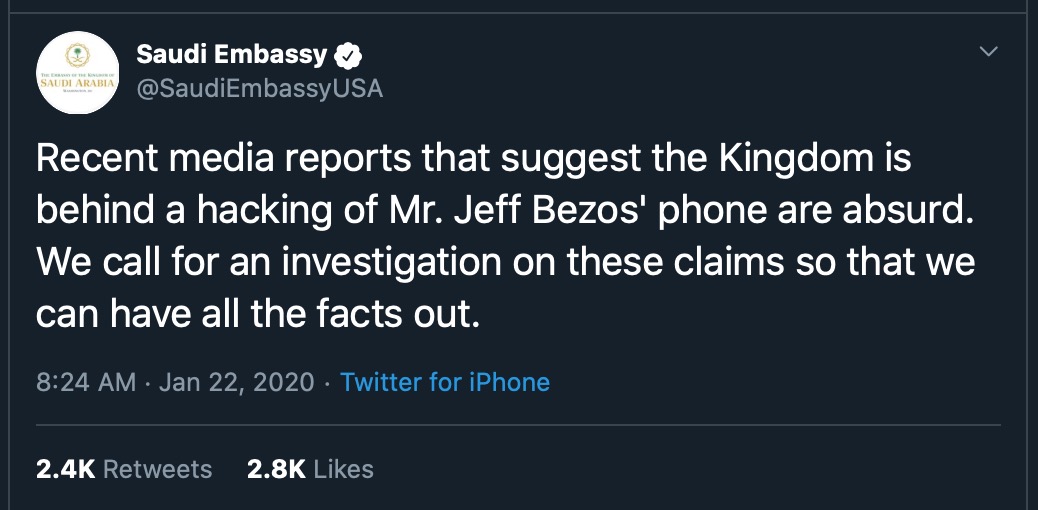

As shown in the image above, you can combine the Dataproc Jobs API with Cloud Scheduler’s HTTP target to automate tasks such as re-running jobs for operational pipelines or re-training ML models at predetermined intervals. Just be sure that the scope and service account used in the Cloud Scheduler job have the correct access permissions to all the Dataproc resources required.
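For a sense of how the pieces fit together, here is a minimal sketch of a Cloud Scheduler job definition whose HTTP target posts to the Dataproc jobs.submit endpoint on a cron schedule. The field names (`httpTarget`, `oauthToken`, `serviceAccountEmail`, `scope`) follow the Cloud Scheduler Job resource as we understand it. The helper name `build_scheduler_job`, the service account, the schedule, and all resource names are hypothetical, and the broad cloud-platform scope is an assumption you may want to narrow.

```python
import base64
import json


def build_scheduler_job(name, schedule, submit_url, submit_body, service_account_email):
    """Return a dict shaped like a Cloud Scheduler Job with an HTTP target.

    Hypothetical helper: it only assembles the definition and creates nothing.
    """
    # The REST representation carries the request body as base64-encoded bytes.
    encoded_body = base64.b64encode(json.dumps(submit_body).encode("utf-8")).decode("ascii")
    return {
        "name": name,
        "schedule": schedule,  # unix-cron format
        "timeZone": "Etc/UTC",
        "httpTarget": {
            "uri": submit_url,
            "httpMethod": "POST",
            "headers": {"Content-Type": "application/json"},
            "body": encoded_body,
            # The service account must be able to submit Dataproc jobs.
            "oauthToken": {
                "serviceAccountEmail": service_account_email,
                "scope": "https://www.googleapis.com/auth/cloud-platform",
            },
        },
    }


# Placeholder values for illustration only.
submit_url = ("https://dataproc.googleapis.com/v1/projects/my-project"
              "/regions/us-central1/jobs:submit")
submit_body = {
    "job": {
        "placement": {"clusterName": "analysis-cluster"},
        "sparkRJob": {"mainRFileUri": "gs://my-bucket/scripts/retrain_model.R"},
    }
}
job = build_scheduler_job(
    "projects/my-project/locations/us-central1/jobs/weekly-retrain",
    "0 3 * * 1",  # every Monday at 03:00
    submit_url, submit_body,
    "scheduler-runner@my-project.iam.gserviceaccount.com",
)
print(json.dumps(job, indent=2))
```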