
We hear a lot about Artificial Intelligence and Machine Learning being used for good in the Cybersecurity world – detecting and responding to malicious and suspicious behavior to safeguard the enterprise – but like many technologies, AI can be used for bad as well as for good. One area which has received increasing attention in the last couple of years is the ability to create ‘Deepfakes’ of audio and video content using generative adversarial networks or GANs. In this post, we take a look at Deepfake and ask whether we should be worried about it.

What is Deepfake?

A Deepfake is fake media content – typically a video with or without audio – produced using machine (“deep”) learning and ‘doctored’ or fabricated to make it appear that some person or persons did or said something that in fact they did not.

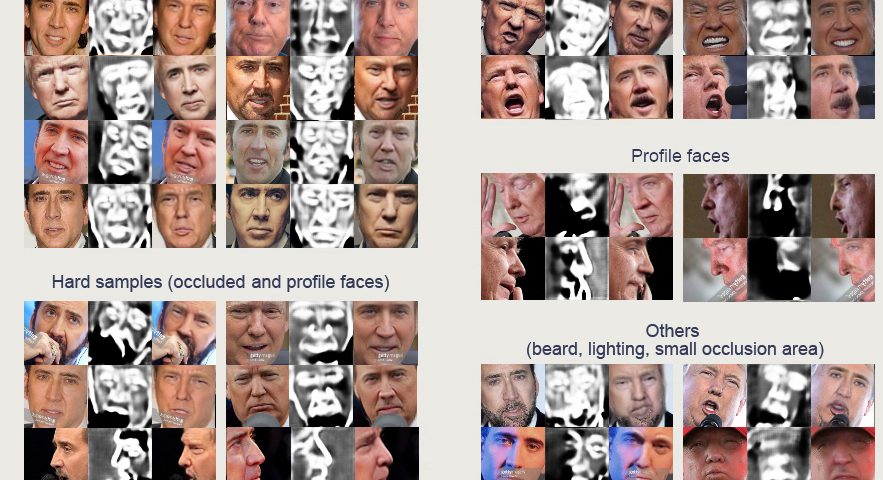

Going beyond older techniques of achieving the same effect such as advanced audio-video editing and splicing, Deepfake takes advantage of computer processing and machine learning to produce realistic video and audio content depicting events that never happened. Currently, this is more or less limited to “face swapping”: placing the head of one or more persons onto other people’s bodies and lip-syncing the desired audio. Nevertheless, the effects can be quite stunning, as seen in this Deepfake of Steve Buscemi faceswapped onto the body of Jennifer Lawrence.

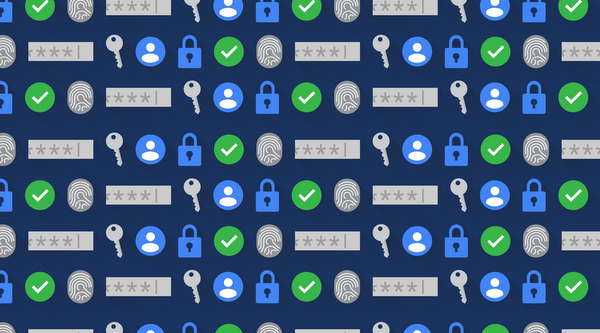

It all started in 2017 when Reddit user ‘deepfakes’ produced sleazy Deep Fakes of celebs engaged in sex acts by transposing the faces of famous people onto the bodies of actors in adult movies. Before long, many people began posting similar kinds of video until moderators banned deepfakes and the subreddit entirely.

Of course, that was only the beginning. Once the technology had been prised out of the hands of academics and turned into usable code that didn’t require a deep understanding of Artificial Intelligence concepts and techniques, many others were able to start playing around with it. Political figures, actors and other public figures soon found themselves ‘faceswapped’ and appearing all over YouTube and other video platforms. Reddit itself contains other, non-pornographic Deep Fakes such as the ‘Safe For Work’ Deepfakes subreddit r/SFWdeepfakes.

How Easy Is It To Create Deep Fakes?

While not trivial, it’s not that difficult either for anyone with average computer skills. As we have seen, where once it would have required vast resources and skills only available to a few specialists, there are now tools available on GitHub with which anyone can easily experiment and create their own Deep Fakes using off-the-shelf computer equipment.

At a minimum, you can do it with a few YouTube URLs. As Gaurav Oberoi explained in this post last year, anyone can use an automated tool that takes a list of half a dozen or so YouTube videos of a few minutes each and then extracts the frames containing the faces of the target and substitute.

The software will inspect various attributes of the original such as skin tone, the subject’s facial expression, the angle or tilt of the head and the ambient lighting, and then try to reconstruct the image with the substitute’s face in that context. Early versions of the software could take days or even weeks to churn through all these details, but in Oberoi’s experiment, it only took around 72 hours with an off-the-shelf GPU (an NVIDIA GTX 1080 Ti) to produce a realistic swap.

Although techniques that use less-sophisticated methods can be just as devastating, as witnessed recently in the doctored video of CNN journalist Jim Acosta and the fake Nancy Pelosi drunk video, real Deep Fakes use a method called generative adversarial networks (GANs). This involves using two competing machine learning algorithms in which one produces the image and the other tries to detect it. When a detection is made, the first AI improves the output to get past the detection. The second AI now has to improve its decision-making to spot the fakery and improve its detection. This process is iterated multiple times until the Deep Fake-producing AI beats the detection AI or produces an image that the creator feels is good enough to fool human viewers.
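To make the generator-versus-detector loop concrete, here is a minimal sketch in plain Python. It is a toy and not the code of any Deep Fake tool: the "media" is just a single number, the generator has one parameter, and the detector (discriminator) is a simple logistic classifier. All names (`train_toy_gan`, `real_mean` and so on) and all settings are assumptions made for illustration.

```python
import math
import random


def sigmoid(s):
    """Numerically stable logistic function."""
    if s >= 0:
        return 1.0 / (1.0 + math.exp(-s))
    e = math.exp(s)
    return e / (1.0 + e)


def train_toy_gan(real_mean=2.0, steps=2000, batch=64, lr=0.05, seed=0):
    """Toy adversarial training on one-dimensional data (illustrative only).

    Generator:      G(z) = z + b, where z is standard normal noise
    Discriminator:  D(x) = sigmoid(w * x + c), the estimated chance x is real
    Returns the learned generator offset b.
    """
    rng = random.Random(seed)
    w, c, b = 0.0, 0.0, 0.0
    for _ in range(steps):
        real = [rng.gauss(real_mean, 1.0) for _ in range(batch)]
        fake = [rng.gauss(0.0, 1.0) + b for _ in range(batch)]

        # Detector step: minimise -log D(real) - log(1 - D(fake)).
        grad_w = grad_c = 0.0
        for x in real:
            err = sigmoid(w * x + c) - 1.0  # pushes D(real) towards 1
            grad_w += err * x
            grad_c += err
        for x in fake:
            err = sigmoid(w * x + c)        # pushes D(fake) towards 0
            grad_w += err * x
            grad_c += err
        w -= lr * grad_w / batch
        c -= lr * grad_c / batch

        # Generator step: minimise -log D(fake), i.e. try to be scored as real.
        grad_b = 0.0
        for x in fake:
            grad_b += (sigmoid(w * x + c) - 1.0) * w
        b -= lr * grad_b / batch
    return b


if __name__ == "__main__":
    print("learned generator offset:", train_toy_gan())
```

The generator starts out producing numbers centred on 0 while the real data is centred on `real_mean`. Each round, the detector gets a little better at telling them apart and the generator shifts its output to be scored as real, so under these assumptions the learned offset should drift towards `real_mean`. Real Deep Fake models play the same game with deep neural networks and images rather than a single number.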

Access to GANs is no longer restricted to those with huge budgets and supercomputers.

How Do Deep Fakes Affect Cybersecurity?

Deep Fakes are a new twist on an old ploy: media manipulation. After the old days of splicing audio and video tapes, and later Photoshop and other editing suites, GANs offer us a new way to play with media. Even so, we’re not convinced that they open up a specifically new channel to threat actors, and articles like this one seem to be stretching their credibility when they try to exaggerate the connection between Deep Fakes and conventional phishing threats.

Of course, Deep Fakes do have the potential to catch a lot of attention, circulate and go viral as people marvel at fake media portraying some unlikely turn of events: a politician with slurred speech, a celebrity in a compromising position, controversial quotes from a public figure and the like. By creating content with the ability to attract large amounts of shares, it’s certainly possible that hackers could utilize Deep Fakes in the same way as other phishing content by luring people into clicking on something that has a malicious component hidden inside, or stealthily redirects users to malicious websites while displaying the content. But as we already noted above with the Jim Acosta and Nancy Pelosi fakes, you don’t really need to go deep to achieve that effect.

The one thing we know about criminals is that they are not fond of wasting time and effort on complicated methods when perfectly good and easier ones abound. There’s no shortage of people falling for all the usual, far simpler phishing bait that’s been circulating for years and is still clearly highly effective. For that reason, we don’t see Deep Fakes as a particularly severe threat for this kind of cybercrime for the time being.

That said, be aware that there have been a small number of reported cases of Deep Fake voice fraud in attempts to convince company employees to wire money to fraudulent accounts. This appears to be a new twist on the business email compromise phishing tactic, with the fraudsters using Deep Fake audio of a senior employee issuing instructions for a payment to be made. It just shows that criminals will always experiment with new tactics in the hope of a payday and you can never be too careful.

Perhaps of greater concern are uses of Deepfake content in personal defamation attacks, attempts to discredit the reputations of individuals, whether in the workplace or personal life, and the widespread use of fake pornographic content. So-called “revenge porn” can be deeply distressing even when it is widely acknowledged as fake. The use of Deep Fakes by competitors to discredit executives or businesses is also not beyond the realms of possibility. Perhaps the most likely threat, though, comes from information warfare during times of national emergency and political elections – here comes 2020! – with such events widely thought to be ripe for disinformation campaigns using Deepfake content.

How Can You Spot A Deep Fake?

Depending on the sophistication of the GAN used and the quality of the final image, it may be possible to spot flaws in a Deep Fake in the same way that close inspection can often reveal sharp contrasts, odd lighting or other disjunctions in “photoshopped” images. However, generative adversarial networks have the capacity to produce extremely high quality images that perhaps only another generative adversarial network might be able to detect. Since the whole idea of using a GAN is to ultimately defeat detection by another generative adversarial network, that too may not always be possible.

By far the best judge of fake content, however, is our ability to look at things in context. Individual events or artefacts like video and audio recordings may be – or become – indistinguishable from the real thing in isolation, but detection is a matter of judging something in light of other evidence. To take a trivial example, it would take more than a video of a flying horse to convince us that such animals really exist. We should want not only independent verification (such as a video from another source) but also corroborating evidence. Who witnessed it take off? Where did it land? Where is it currently located? And so on.
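One way to picture this kind of reasoning is as a simple Bayesian update, where each independent piece of evidence multiplies the odds that a claim is true. The sketch below is only an illustration: the function name `posterior_probability` and every number in it are hypothetical, and it assumes the pieces of evidence really are independent of one another.

```python
def posterior_probability(prior, likelihood_ratios):
    """Update a prior probability with independent pieces of evidence.

    Each likelihood ratio is
    P(evidence | claim true) / P(evidence | claim false).
    """
    odds = prior / (1.0 - prior)
    for ratio in likelihood_ratios:
        odds *= ratio
    return odds / (1.0 + odds)


# Hypothetical numbers, chosen only for illustration.
prior = 1e-6  # flying horses are wildly implausible to begin with
video_only = posterior_probability(prior, [100.0])
with_corroboration = posterior_probability(prior, [100.0] * 4)

print(f"video alone: {video_only:.6f}")
print(f"video plus three independent corroborations: {with_corroboration:.3f}")
```

With these made-up figures, a single convincing video lifts the probability to only about 0.0001, while the same video backed by three independent corroborating sources takes it to roughly 0.99. Cheap, convincing fakes push the likelihood ratio of any single video closer to 1, which is why the corroborating context matters more than the clip itself.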

We need to be equally circumspect when viewing consumable media, particularly when it makes surprising or outlandish claims. Judging veracity in context is not a new approach. It’s the same idea behind multi-factor authentication; it’s the standard for criminal investigations; it underpins scientific method and, indeed, it’s at the heart of contextual malware detection as used in SentinelOne.

What is new, perhaps, is that we may have to start applying more rigour in our judgement of media content that depicts far-fetched or controversial actions and events. That’s arguably something we should be doing anyway. Perhaps the rise of Deepfake will encourage us all to get better at it.

Conclusion

With accessible tools for creating Deep Fakes now available to anyone, it’s understandable that there should be concern about the possibility of this technology being used for nefarious purposes. But that’s true of pretty much all technological innovation; there will always be some people who find ways to use it to the detriment of others. Nonetheless, Deepfake technology comes out of the same advancements as other machine learning tools that improve our lives in immeasurable ways, including in the detection of malware and malicious actors.

While creating a fake video for the purposes of information warfare is not beyond the realms of possibility or even likelihood, it is not beyond our means to recognize disinformation by judging it in the context of other things that we know to be true, reasonable or probable. Should we be worried about Deep Fakes? As with all critical reasoning, we should be worried about taking on trust extraordinary claims that are not supported by an extraordinary amount of other credible evidence.
