Cloud Storage Connector is an open source Apache 2.0 implementation of an HCFS interface for Cloud Storage. Architecturally, it is composed of four major components:

- gcs: implementation of the Hadoop Distributed File System and input/output channels
- util-hadoop: common (authentication, authorization) Hadoop-related functionality shared with other Hadoop connectors
- gcsio: high-level abstraction of Cloud Storage JSON API
- util: utility functions (error handling, HTTP transport configuration, etc.) used by gcs and gcsio components

In the following sections, we highlight a few of the major features in this new release of Cloud Storage Connector. For a full list of settings and how to use them, check out the newly published Configuration Properties and gcs-core-default.xml settings pages.

Here are the key new features of the Cloud Storage Connector:

Improved performance for Parquet and ORC columnar formats

As part of Twitter’s migration of Hadoop to Google Cloud, in mid-2018 Twitter started testing big data SQL queries against columnar files in Cloud Storage at massive scale, against a 20+ PB dataset. Since the Cloud Storage Connector is open source, Twitter prototyped the use of range requests to read only the columns required by the query engine, which increased read efficiency. We incorporated that work into a more generalized fadvise feature.

In previous versions of the Cloud Storage Connector, reads were optimized for MapReduce-style workloads, where all data in a file was processed sequentially. However, modern columnar file formats such as Parquet or ORC are designed to support predicate pushdown, allowing the big data engine to intelligently read only the chunks of the file (columns) that are needed to process the query. The Cloud Storage Connector now fully supports predicate pushdown, and only reads the bytes requested by the compute layer. This is done by introducing a technique known as fadvise.

You may already be familiar with the fadvise feature in Linux. Fadvise allows applications to provide a hint to the Linux kernel with the intended I/O access pattern, indicating how it intends to read a file, whether for sequential scans or random seeks. This lets the kernel choose appropriate read-ahead and caching techniques to increase throughput or reduce latency.

The new fadvise feature in Cloud Storage Connector implements similar functionality and, in its default auto mode, automatically detects whether the current big data application’s I/O access pattern is sequential or random.

In the default auto mode, fadvise starts by assuming a sequential read pattern, then switches to random mode when it detects a backward seek or a long forward seek. These seeks are performed by the position() method call, which can move the current channel position backward or forward. Any backward seek triggers the switch to random mode; a forward seek triggers it only if it is longer than 8 MiB (configurable via fs.gs.inputstream.inplace.seek.limit). The detected read pattern is not carried over between files: auto mode resets to sequential for each new file read session.
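The switching rule described above can be sketched in a few lines. This is a minimal illustration, not the connector's code: the class name `AutoFadviseTracker` and its methods are hypothetical, and the only values taken from the text are the two modes and the 8 MiB default for fs.gs.inputstream.inplace.seek.limit.

```python
from dataclasses import dataclass

SEQUENTIAL = "SEQUENTIAL"
RANDOM = "RANDOM"

# 8 MiB, mirroring the documented default of fs.gs.inputstream.inplace.seek.limit.
DEFAULT_INPLACE_SEEK_LIMIT = 8 * 1024 * 1024


@dataclass
class AutoFadviseTracker:
    """Hypothetical tracker for ONE file read session (illustration only)."""

    inplace_seek_limit: int = DEFAULT_INPLACE_SEEK_LIMIT
    position: int = 0
    mode: str = SEQUENTIAL  # every new session starts out sequential

    def seek(self, new_position: int) -> str:
        """Record a position change and return the mode after the seek."""
        if new_position < 0:
            raise ValueError("position must be non-negative")
        distance = new_position - self.position
        # Any backward seek, or a forward seek longer than the limit,
        # switches the session to RANDOM. The sketch never switches back.
        if distance < 0 or distance > self.inplace_seek_limit:
            self.mode = RANDOM
        self.position = new_position
        return self.mode

    def read(self, num_bytes: int) -> None:
        """Advance the position as a plain sequential read would."""
        self.position += num_bytes
```

Creating a fresh `AutoFadviseTracker` for each file models the per-session reset: a columnar reader that jumps back to a file footer flips only that session to RANDOM, while the next file starts in SEQUENTIAL again.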

Fadvise can be configured via the gcs-core-default.xml file with the fs.gs.inputstream.fadvise parameter:

- AUTO (default), also called adaptive range reads: In this mode, the connector starts in SEQUENTIAL mode, but switches to RANDOM as soon as it detects a backward seek, or a forward seek greater than fs.gs.inputstream.inplace.seek.limit bytes (8 MiB by default).
- RANDOM: The connector will send bounded range requests to Cloud Storage; Cloud Storage read-ahead will be disabled.
- SEQUENTIAL: The connector will send a single, unbounded streaming request to Cloud Storage to read an object from a specified position sequentially.
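To make the difference between bounded and unbounded requests concrete, here is a small sketch that assumes the reads are expressed as standard HTTP Range headers. The helper `range_header` is hypothetical and is not part of the connector; it only shows the shape of the two request styles.

```python
from typing import Optional


def range_header(start: int, length: Optional[int] = None) -> str:
    """Build an HTTP Range header value for a read starting at `start`.

    length=None gives an open-ended range (SEQUENTIAL-style streaming read);
    a positive length gives a bounded range (RANDOM-style read).
    """
    if start < 0:
        raise ValueError("start must be non-negative")
    if length is None:
        # Open-ended: everything from `start` to the end of the object.
        return f"bytes={start}-"
    if length <= 0:
        raise ValueError("length must be positive")
    # HTTP byte ranges are inclusive on both ends.
    return f"bytes={start}-{start + length - 1}"
```

For example, `range_header(4096)` returns `bytes=4096-`, while `range_header(4096, 1024)` returns `bytes=4096-5119`, so a query engine that needs one 1 KiB column chunk transfers only those bytes.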

In most use cases, the default setting of AUTO should be sufficient. It dynamically adjusts the mode for each file read. However, you can hard-set the mode.

Ideal use cases for fadvise in RANDOM mode include:

- SQL (Spark SQL, Presto, Hive, etc.) queries into columnar file formats (Parquet, ORC, etc.) in Cloud Storage
- Random lookups by a database system (HBase, Cassandra, etc.) to storage files (HFile, SSTables) in Cloud Storage

Ideal use cases for fadvise in SEQUENTIAL mode include:

- Traditional MapReduce jobs that scan entire files sequentially
- DistCp file transfers

Cooperative locking: Isolation for Cloud Storage directory modifications

Another major addition to Cloud Storage Connector is cooperative locking, which isolates directory modification operations performed through the Hadoop file system shell (hadoop fs command) and other HCFS API interfaces to Cloud Storage.

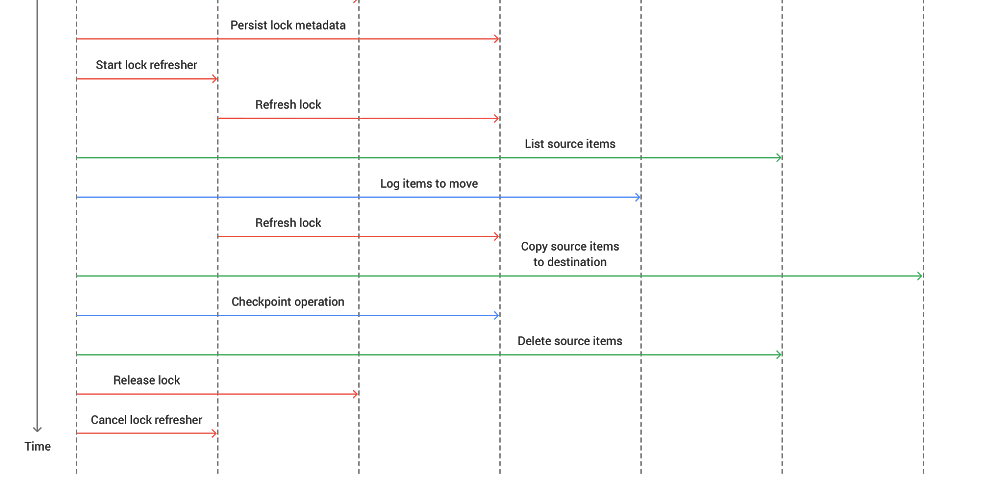

Although Cloud Storage is strongly consistent at the object level, it does not natively support directory semantics. For example, what should happen if two users issue conflicting commands (delete vs. rename) to the same directory? In HDFS, such directory operations are atomic and consistent. So Joep Rottinghuis, leading the @TwitterHadoop team, worked with us to implement cooperative locking in Cloud Storage Connector. This feature prevents data inconsistencies during conflicting directory operations to Cloud Storage, facilitates recovery of any failed directory operations, and simplifies operational migration from HDFS to Cloud Storage.

With cooperative locking, concurrent directory modifications that could interfere with each other, such as one user deleting a directory while another user is trying to rename it, are isolated from one another. Cooperative locking also supports recovery of failed directory modifications (for example, when a JVM crashes mid-operation) via the FSCK command, which can resume or roll back the incomplete operation.
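The general idea can be sketched with an in-memory lock table. This is an illustration under stated assumptions, not the connector's implementation: the class `DirectoryLockTable`, the lease duration, and the rule that a stale lock keeps blocking until it is explicitly released are all hypothetical choices made for the example.

```python
import time
from dataclasses import dataclass, field


def _overlaps(a: str, b: str) -> bool:
    """True if the paths are equal or one is an ancestor of the other."""
    a = a.rstrip("/") + "/"
    b = b.rstrip("/") + "/"
    return a.startswith(b) or b.startswith(a)


@dataclass
class DirectoryLockTable:
    """Hypothetical stand-in for a shared lock record (illustration only)."""

    lease_seconds: float = 120.0  # assumed lease length, not a documented value
    _locks: dict = field(default_factory=dict)  # op id -> (paths, expiry)

    def try_acquire(self, op_id, paths, now=None) -> bool:
        """Lock `paths` for `op_id` unless they overlap a held lock."""
        now = time.time() if now is None else now
        for held_paths, _expiry in self._locks.values():
            if any(_overlaps(p, h) for p in paths for h in held_paths):
                return False  # a conflicting operation is in progress
        self._locks[op_id] = (tuple(paths), now + self.lease_seconds)
        return True

    def release(self, op_id) -> None:
        """Drop the lock once the operation finishes or is repaired."""
        self._locks.pop(op_id, None)

    def expired_operations(self, now=None) -> list:
        """Operations whose lease lapsed: candidates to resume or roll back."""
        now = time.time() if now is None else now
        return [op for op, (_, expiry) in self._locks.items() if expiry <= now]
```

In this sketch a rename holding `gs://bucket/logs` blocks a delete of `gs://bucket/logs/2019` but not one of `gs://bucket/logs-archive`. An operation that crashes without releasing its lock eventually shows up in `expired_operations`, which plays the role of the list a recovery tool such as FSCK would work through.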

With this cooperative locking feature, you can now perform isolated directory modification operations, using the hadoop fs commands as you normally would to move or delete a folder: