Gathering source data from financial markets

Grasshopper sources its data from a co-located environment, where the stock exchange allocates rack space so that financial companies can connect to its platform with the lowest possible latency. Grasshopper already uses Solace PubSub+ appliances in the co-located data centers for low-latency messaging in its trading platform. Solace PubSub+ software running in GCP natively bridges the on-premises Solace environment and the GCP project: market data and other events are bridged from the on-premises appliances to Solace PubSub+ event brokers running in GCP as a lossless, compressed stream. With some event brokers on-premises and others in GCP, the result is a hybrid cloud event mesh in which orders published to the on-premises brokers flow easily to the Solace cloud brokers. The SolaceIO connector consumes this market data stream and makes it available for further processing with Apache Beam running in Cloud Dataflow. The processed data can then be ingested into Cloud Bigtable, BigQuery and other destinations in a publish/subscribe manner.

The financial market data arrives in Grasshopper’s environment in the form of deltas, which represent updates to an existing order book. Data needs to be processed in sequence for any given symbol, which requires some of the more advanced features of Apache Beam, including keyed state and timers.
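
To make the idea of a delta concrete, here is a minimal sketch in plain Python (not Beam code). The `OrderBook` layout, the `apply_delta` helper, and the convention that a size of 0 removes a price level are all assumptions made for illustration; the actual delta format used by the data provider is not described here.

```python
from dataclasses import dataclass, field


@dataclass
class OrderBook:
    """Aggregated size at each price level, per side (hypothetical layout)."""
    bids: dict = field(default_factory=dict)  # price -> size
    asks: dict = field(default_factory=dict)  # price -> size


def apply_delta(book, side, price, size):
    """Apply one delta: set the size at a price level.

    Assumption for this sketch: a size of 0 means the level is removed.
    """
    levels = book.bids if side == "bid" else book.asks
    if size == 0:
        levels.pop(price, None)
    else:
        levels[price] = size


book = OrderBook()
apply_delta(book, "bid", 100.0, 5)   # new bid level
apply_delta(book, "ask", 100.5, 3)   # new ask level
apply_delta(book, "bid", 100.0, 0)   # bid level removed again
```

Because each delta only makes sense relative to the book state before it, applying deltas out of order or skipping one leaves the book wrong, which is why per-symbol ordering matters.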

This financial data comes with specific financial industry processing requirements:

- Data updates must be processed in the correct order, and processing for each instrument is independent.

- If there is a gap in the deltas, a request must be sent to the data provider to provide a snapshot, which represents the whole state of an order book.

- Meanwhile, deltas must be queued while Ahab waits for the snapshot response.

- Ahab also needs to produce its own regular snapshots so that clients can query the state of the order book at any time in the middle of the day without applying all deltas since the start-of-day snapshot. To do this, it needs to keep track of the book state.

- The data provider needs to be monitored so that connections to it and subscriptions to real-time streams of market data can be refreshed if necessary.
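
The first three requirements above can be sketched as a small per-instrument state machine. This is plain Python rather than Beam code, and it is only an illustration: the `InstrumentSequencer` class, the integer sequence numbers, and the `request_snapshot` callback are hypothetical names, not part of Ahab or of any Solace or Beam API.

```python
class InstrumentSequencer:
    """Tracks one instrument: applies in-order deltas, buffers after a gap."""

    def __init__(self, request_snapshot):
        self.request_snapshot = request_snapshot  # hypothetical callback to the provider
        self.last_seq = 0
        self.awaiting_snapshot = False
        self.pending = []  # deltas queued while waiting for a snapshot
        self.applied = []  # stand-in for the real order book state

    def on_delta(self, seq, payload):
        if self.awaiting_snapshot:
            # Queue everything until the snapshot response arrives.
            self.pending.append((seq, payload))
        elif seq == self.last_seq + 1:
            self.applied.append(payload)
            self.last_seq = seq
        elif seq > self.last_seq + 1:
            # Gap detected: ask for a snapshot and start queueing.
            self.awaiting_snapshot = True
            self.pending.append((seq, payload))
            self.request_snapshot()
        # seq <= last_seq: stale or duplicate delta, ignored in this sketch.

    def on_snapshot(self, snapshot_seq, state):
        # The snapshot replaces the whole state; queued deltas are then replayed.
        self.applied = list(state)
        self.last_seq = snapshot_seq
        self.awaiting_snapshot = False
        queued, self.pending = sorted(self.pending, key=lambda d: d[0]), []
        for seq, payload in queued:
            self.on_delta(seq, payload)


requests = []     # records which symbols triggered a snapshot request
sequencers = {}   # one independent sequencer per instrument


def route(symbol, seq, payload):
    if symbol not in sequencers:
        sequencers[symbol] = InstrumentSequencer(lambda: requests.append(symbol))
    sequencers[symbol].on_delta(seq, payload)


route("ABC", 1, "d1")
route("ABC", 3, "d3")   # delta 2 is missing: gap
route("ABC", 4, "d4")   # queued while waiting
route("XYZ", 1, "x1")   # another instrument is unaffected
sequencers["ABC"].on_snapshot(2, ["s2"])
```

After the snapshot arrives, the queued deltas 3 and 4 are applied on top of it, while the "XYZ" instrument carries on independently throughout.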

How Grasshopper’s financial data pipeline works

There are two important components to processing this financial data: reading data from the source and using stateful data processing.

Reading data from the source

Grasshopper uses Solace as the messaging bus for market data publication and subscriptions, and reads from it with SolaceIO, an Apache Beam read connector. The team at Grasshopper uses Solace topics streaming into Solace queues to ensure that no deltas are lost and no gaps occur. Queues (and topics) provide guaranteed delivery semantics: if message consumers are slow or down, Solace stores the messages until the consumers are back online, then delivers the messages to the available consumers. The SolaceIO code uses checkpoint marks to ensure that messages in the queues move from Solace to Beam in a guaranteed-delivery, fault-tolerant manner, with Solace waiting for message acknowledgements before deleting them. Replay is also supported when required.
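
The acknowledge-after-checkpoint idea can be illustrated with a toy model in plain Python. Neither class below is the SolaceIO or Solace API; `GuaranteedQueue` and `CheckpointingReader` are hypothetical stand-ins, and the method name `finalize_checkpoint` is only loosely modelled on the way Beam finalizes a checkpoint mark for an unbounded source.

```python
from collections import OrderedDict


class GuaranteedQueue:
    """Toy stand-in for a broker queue: keeps messages until acknowledged."""

    def __init__(self):
        self._unacked = OrderedDict()  # message id -> body, in publish order
        self._next_id = 1

    def publish(self, body):
        self._unacked[self._next_id] = body
        self._next_id += 1

    def deliver(self):
        """Return every message not yet acknowledged (so failures cause redelivery)."""
        return list(self._unacked.items())

    def ack(self, message_ids):
        for message_id in message_ids:
            self._unacked.pop(message_id, None)


class CheckpointingReader:
    """Toy reader: acknowledges messages only once its checkpoint is finalized."""

    def __init__(self, queue):
        self.queue = queue
        self.in_flight = []  # ids read but not yet durably checkpointed

    def read(self):
        batch = self.queue.deliver()
        self.in_flight = [message_id for message_id, _ in batch]
        return [body for _, body in batch]

    def finalize_checkpoint(self):
        # Only now is it safe for the broker to delete the messages.
        self.queue.ack(self.in_flight)
        self.in_flight = []


queue = GuaranteedQueue()
queue.publish("d1")
queue.publish("d2")

first_attempt = CheckpointingReader(queue).read()  # reader "crashes" before finalizing
retry_reader = CheckpointingReader(queue)
redelivered = retry_reader.read()                  # same messages are delivered again
retry_reader.finalize_checkpoint()
remaining = queue.deliver()
```

In this model a reader that fails before finalizing loses nothing, because the queue still holds the messages; the trade-off is that downstream processing may see a message more than once and has to tolerate that.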

Using stateful data processing

Grasshopper uses stateful processing to keep an order book’s state for the purpose of snapshot production. The firm uses timely processing to schedule snapshot production and to check data provider availability. One of this project’s more challenging areas was retaining the state of a financial instrument across time window boundaries.

The keyed state API in Apache Beam let Grasshopper’s team store information for an instrument within a fixed window, also known as keyed-window state. However, data for the instruments needs to propagate forward to the next window. Our Cloud Dataflow team worked closely with Grasshopper’s team to implement a workflow in which the fixed time windows flow into a global window, using the timer API to ensure that the necessary data order is retained.
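
As a rough mental model of why a global window plus timers helps, consider the following plain Python sketch. It is not Beam code and not Grasshopper's implementation: `SnapshotScheduler`, its fields, and the 60-second interval are assumptions for illustration. The point it shows is that per-instrument state lives outside any single fixed interval, while a repeating timer decides when a snapshot is emitted.

```python
class SnapshotScheduler:
    """Per-instrument state that outlives any fixed interval, plus a repeating timer."""

    def __init__(self, interval_s):
        self.interval_s = interval_s
        self.state = {}      # symbol -> latest book state, kept across intervals
        self.next_fire = {}  # symbol -> time at which the next snapshot timer fires

    def on_element(self, symbol, ts, book_state):
        self.state[symbol] = book_state
        # The first element seen for a key arms its timer at the end of that interval.
        if symbol not in self.next_fire:
            self.next_fire[symbol] = (ts // self.interval_s + 1) * self.interval_s

    def advance_to(self, now):
        """Fire every timer due at or before `now`, re-arming each for the next interval."""
        snapshots = []
        for symbol in sorted(self.next_fire):
            while self.next_fire[symbol] <= now:
                fire_time = self.next_fire[symbol]
                snapshots.append((symbol, fire_time, self.state[symbol]))
                self.next_fire[symbol] = fire_time + self.interval_s
        return snapshots


scheduler = SnapshotScheduler(interval_s=60)
scheduler.on_element("ABC", 10, {"bid": 100})

first = scheduler.advance_to(60)    # snapshot at the end of the first interval
second = scheduler.advance_to(120)  # no new data arrived, state is carried forward
scheduler.on_element("ABC", 130, {"bid": 101})
third = scheduler.advance_to(180)   # snapshot reflects the update
```

With state scoped to a fixed window only, the second snapshot would have nothing to report, since no element arrived in that interval; keeping the state in a longer-lived scope is what lets the book carry over.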

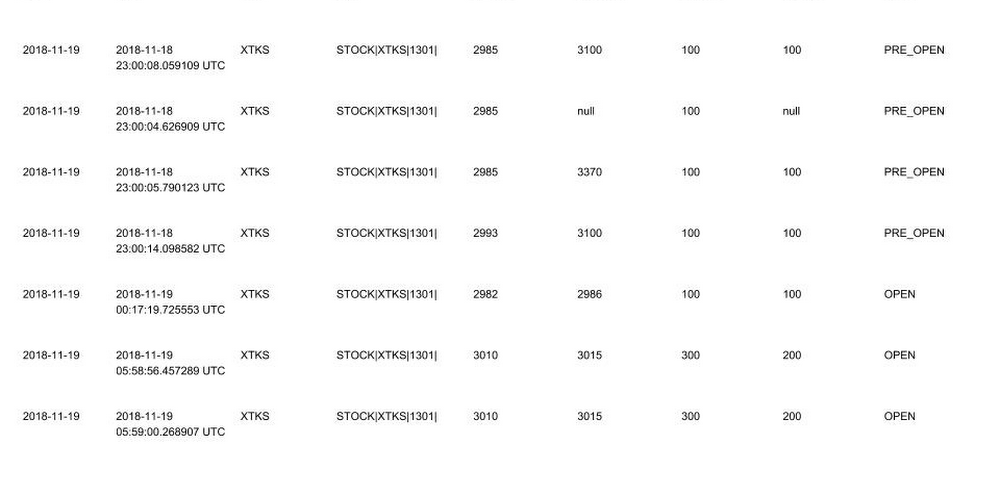

The data processed with Apache Beam is written to BigQuery in day-partitioned tables, which can then be used for further analysis. Here’s a look at some sample data: